One of the things I wanted to do with the IDRC Data Sharing Pilot Project that we’ve just published was to try and demonstrate some best practice. This became more important as the project progressed and our focus on culture change developed. As I came to understand more deeply how much this process was one of showing by doing, for all parties, it became clear how crucial it was to make a best effort.

This turns out to be pretty hard. There are lots of tools out there to help with pieces of the puzzle, but actually sharing a project end-to-end is pretty rare. This means that the tools that help with one part don’t tend to interconnect well with others, at multiple levels. What I ended up with was a tangled mess of dependencies that was challenging to work through. In this post I want to focus on the question of how easy it is to connect up the multiple objects in a project via citations.

The set of objects

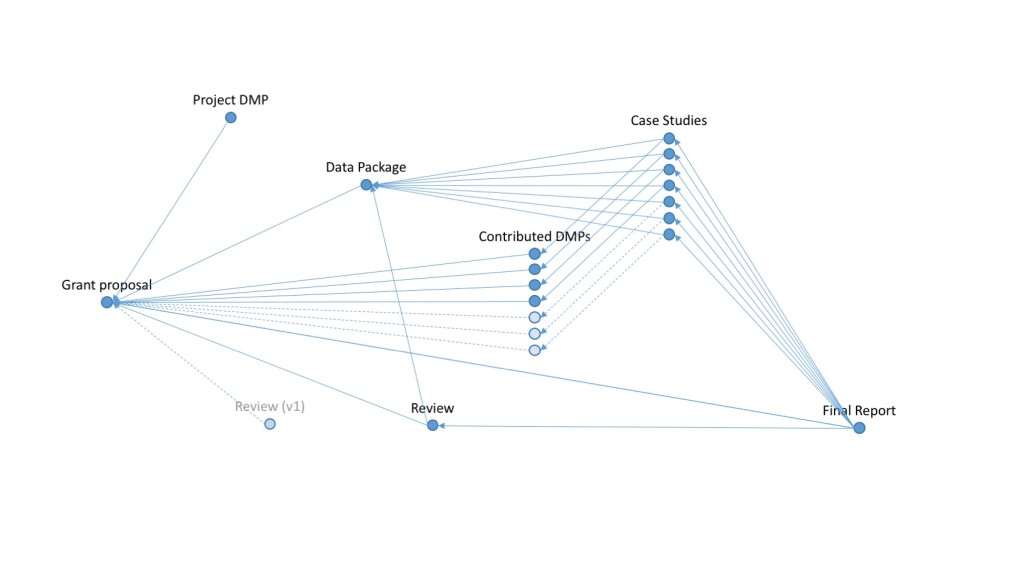

The full set of objects to be published from the project was:

- The grant proposal – this acts as a representation of the project as a whole and we published it quite early on in RIO

- The literature and policy review – this was carried out relatively early on but took a while to publish. I wanted it to reference the materials from expert interviews that I intended to deposit, but this got complicated (see below)

- The Data Management Plan for the project – again published in RIO relatively early on in the process

- Seven Data Management Plans – one from each of the participating projects in the pilot

- Seven Case Studies – one on each of the participating projects. These in turn needed to be connected back to the data collected, and each would ideally reference the relevant DMP.

- The final report – in turn this should reference the DMPs and the case studies

- The data – as a data sharing project we wanted to share data. This consisted of a mix of digital objects and I struggled to figure out how to package it up for deposit, leading in the end to this epic rant. The solution to that problem will be the subject of the next post.

Alongside this, we wanted as far as possible to connect things up via ORCID and to have good metadata flowing through the Crossref and DataCite systems. In essence we wanted to push as far as possible in populating a network of connections to build up the map of research objects.

The problem

In doing this, there’s a fairly obvious problem. If we use DOIs (whether from Crossref or DataCite) as identifiers, and we want the references between objects to be formal citations that Crossref captures as relationships between objects, then we can only reference in one direction. More specifically, you can’t reference an object until its DOI is registered. Zenodo lets you “reserve” a DOI in advance, but until it is formally registered (with DataCite in this case) none of the validation or lookup systems work. The citation graph is a directed graph, so ordering matters. On top of this, you can’t update a reference list after formal publication (or at least not easily).

The effect of this is that if you want to get the reference network right, and if “right” means highly interconnected, as was the case here, then publication has to proceed in a very specific order.

In this case the order was:

- The grant proposal – all other objects needed to refer to “the project”

- The data set – assuming there was only one. It might have been better to split it, but it’s hard to say. The choice to have one package meant that it had to be positioned here.

- The review – because it needed to reference the data package

- The contributing project Data Management Plans – these could have gone either second or third but ended up being the blocker

- The Case Studies – because they need to reference the review, the data package, the project and the Data Management Plans

- The final report – has to go last because it refers to everything else
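The ordering constraint above is a topological sort of the citation graph: each object has to come after everything it cites, and a cycle (two objects that each need to cite the other) means no valid order exists at all. A minimal Python sketch, assuming the standard-library `graphlib` module (Python 3.9+); the object labels and the `CITES` mapping are hypothetical stand-ins for the objects listed above, with dependencies taken from the reasons given in the list.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical labels for the project's objects. Each maps to the set of
# objects it cites; a cited object needs a registered DOI first, so it must
# be published before anything that cites it.
CITES = {
    "grant_proposal": set(),
    "data_package": {"grant_proposal"},
    "review": {"grant_proposal", "data_package"},
    "partner_dmps": {"grant_proposal"},
    "case_studies": {"grant_proposal", "data_package", "review", "partner_dmps"},
    "final_report": {"grant_proposal", "data_package", "review",
                     "partner_dmps", "case_studies"},
}


def publication_order(cites):
    """Return one valid publication order (cited objects first).

    Raises graphlib.CycleError if two objects need to cite each other,
    in which case no order lets every citation point at a registered DOI.
    """
    return list(TopologicalSorter(cites).static_order())


print(publication_order(CITES))

# A back-reference from the data package to the final report creates a cycle.
with_back_ref = {**CITES, "data_package": {"grant_proposal", "final_report"}}
try:
    publication_order(with_back_ref)
except CycleError as err:
    print("no valid order:", err)
```

More than one order is valid here: the partner DMPs depend only on the proposal, so they can slot in anywhere before the Case Studies, which fits the observation that they could have gone second or third.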

In practice, the blocker became the Data Management Plans. Many of the contributing projects are not academic; formal publishing doesn’t provide any particular incentive for them, and they had other things to worry about. But for me, if I was to formally reference their DMPs, they had to be published before I could release the Case Studies or the final report. In the end four DMPs were published and three were referenced by the documents in the Data Package. Some of the contributors had ORCIDs that got connected up via the published DMPs, but not all.

The challenges and possible solutions

The issue here was that the only practical way to make this work was to get everything lined up, and then release carefully over a series of days as each object was released by the relevant publisher (Zenodo, and Pensoft Publishing for RIO). This meant a delay of months as I tried to get all the pieces fully lined up. For most projects this is impractical. The best way to get more sharing is to share early, as soon as each object is ready. If we have to wait until everything is perfect it will never happen: researchers will lose interest, and much material will either not be shared or not get properly linked up.

One solution to this is to reserve and pre-register DOIs. Crossref is working towards “holding pages” that allow a publisher to register a DOI before the document is formally released. However, this is intended to operate on acceptance. That might be ok for a journal like RIO, where peer review operates after publication and acceptance is based on technical criteria, but it wouldn’t have worked if I had sought to publish in a more traditional journal. Registering Crossref DOIs that will definitely get used in the near future may be ok; registering ones that may never resolve to a final formally published document would be problematic.

The alternative solution is to somehow bundle all the objects up into a “holding package” which, once it is complete, triggers the process of formal publication and identifier registration. One form of this might be a system, a little like that imagined for machine-actionable DMPs, where the records get made and connections stored until, when things are ready, the various objects get fired off to their formal homes in the right order. There are a series of problems here as well, though. This mirrors the preprint debate: if it’s not a “real” version of the final thing, does that not create potential confusion? The system could still get blocked by a single object being slow in acceptance. This might be better than a researcher being blocked, since the system can keep trying without getting bored, but it will likely require researcher intervention.
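To make the holding-package idea a bit more concrete, here is a minimal sketch of the release logic, assuming each object is marked ready when its publisher accepts it and that an identifier is registered only at the moment of release. `HoldingPackage` and `fake_register` are hypothetical names, and the register function is a stub standing in for deposit plus DOI registration, not a Zenodo, RIO or Crossref API. It also reproduces the blocking problem: one object that is not ready holds back everything that cites it.

```python
from dataclasses import dataclass, field

# Hypothetical citation map (object -> objects it cites).
CITES = {
    "grant_proposal": set(),
    "data_package": {"grant_proposal"},
    "review": {"grant_proposal", "data_package"},
    "partner_dmps": {"grant_proposal"},
    "case_studies": {"grant_proposal", "data_package", "review", "partner_dmps"},
    "final_report": {"grant_proposal", "data_package", "review",
                     "partner_dmps", "case_studies"},
}


def fake_register(name, refs):
    # Hypothetical stub for deposit plus identifier registration; refs are the
    # identifiers this object would cite. Returns a placeholder, not a DOI.
    return f"placeholder-id/{name}"


@dataclass
class HoldingPackage:
    cites: dict
    ready: set = field(default_factory=set)       # accepted by their publisher
    released: dict = field(default_factory=dict)  # object -> registered id

    def mark_ready(self, name):
        self.ready.add(name)

    def release_pass(self, register):
        """Release each ready object once everything it cites is released."""
        newly_released = []
        progress = True
        while progress:
            progress = False
            for name, deps in self.cites.items():
                if name in self.released or name not in self.ready:
                    continue
                if deps.issubset(self.released):
                    refs = [self.released[d] for d in sorted(deps)]
                    self.released[name] = register(name, refs)
                    newly_released.append(name)
                    progress = True
        return newly_released

    def blocked(self):
        """Objects still held back, whether not ready or waiting on a citation."""
        return sorted(set(self.cites) - set(self.released))


pkg = HoldingPackage(cites=CITES)
for name in CITES:
    if name != "partner_dmps":  # one slow object
        pkg.mark_ready(name)
print(pkg.release_pass(fake_register))  # ['grant_proposal', 'data_package', 'review']
print(pkg.blocked())  # ['case_studies', 'final_report', 'partner_dmps']
pkg.mark_ready("partner_dmps")
print(pkg.release_pass(fake_register))  # ['partner_dmps', 'case_studies', 'final_report']
```

The system can keep calling `release_pass` without getting bored, but nothing in it can make a slow object ready; that step still needs a person.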

A different version might involve “informal release” by the eventual publisher(s), with the updates being handled internally. Again, recent Crossref work can support this through the ability of DOIs for different versions of an object to point forward to the final one. But this illustrates the final problem: it requires all the publishers to adopt these systems.

Much of what I did with this project was only possible because of the systems at RIO. More commonly there are multiple publishers involved, all with subtly different rules and workflows. Operating across Zenodo and RIO was complex enough, with only one link to be made. Working across multiple publishers with partial and probably different implementations of the most recent Crossref systems would be a nightmare. RIO was accommodating throughout when I made odd requests to fix things manually. A big publisher would likely not have bothered. The fiddly process of trying to get everything right was hard enough, and I knew what my goals were. Someone with less experience, trying to do this across multiple systems, would have given up much, much earlier.

Conclusions and ways forward (and backwards)

A lot of what I struggled with, I struggled with because I wanted to leverage the “traditional” and formal systems of referencing. I could have made much more progress with annotation or alternative modes of linking, but I wanted to push the formal systems. These formal systems are changing in ways that make what I was trying to do easier, but it’s slow going. Many people have been talking about “packages” for research projects or articles: the idea of being able to easily grab all the materials (code, data, documents) relevant to a project. For that we need much more effective ways of building up the reference graph in both directions.

The data package, which is the piece of the project where I had the most control over internal structure and outward references, does not actually point at the final report. It can’t, because we didn’t have the DOI for the report when we put the data package together. The real problem here is the directed nature of the graph. The solution lies in some mechanism that either lets us update reference lists after publication or lets us capture references that point in both directions. The full reference graph can only be captured in practice if we look for links running both from citing to referenced object and from referenced object to citing object, because sometimes we have to publish them in the “wrong” order.
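Capturing links in both directions amounts to accepting assertions from either end and normalising them into one set of (citing, referenced) pairs. A minimal sketch, assuming hypothetical metadata records with `cites` and `cited_by` fields; DataCite’s metadata schema has comparable paired relation types (for example `Cites`/`IsCitedBy`), but the record layout here is illustrative only. The final report, published last, records the link from the data package that the data package itself could not state.

```python
# Hypothetical metadata records. "cites" is the object's own forward reference
# list; "cited_by" holds links asserted from the referenced end.
RECORDS = {
    # The proposal also records the data package's citation; the duplicate
    # collapses into a single edge below.
    "grant_proposal": {"cites": [], "cited_by": ["data_package"]},
    "data_package": {"cites": ["grant_proposal"], "cited_by": []},
    "final_report": {
        "cites": ["grant_proposal", "data_package"],
        # The data package points here, but its own metadata could not say so
        # because this object had no identifier when the package was published.
        "cited_by": ["data_package"],
    },
}


def citation_edges(records):
    """Normalise assertions from either end into (citing, referenced) pairs."""
    edges = set()
    for obj, record in records.items():
        for target in record.get("cites", []):
            edges.add((obj, target))  # forward: obj -> target
        for source in record.get("cited_by", []):
            edges.add((source, obj))  # reverse assertion, flipped to source -> obj
    return edges


def references_from(edges, obj):
    """Everything obj points at, whichever end recorded the link."""
    return sorted(target for source, target in edges if source == obj)


edges = citation_edges(RECORDS)
print(references_from(edges, "data_package"))  # ['final_report', 'grant_proposal']
```

The merged graph may contain cycles (here the data package and the final report point at each other). That is fine for discovery; only the publication order needs an acyclic graph.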

How we manage that I don’t know. Updatable, versioned links that simply use web standards will be one suggestion. But that doesn’t provide the social affordances and systems of our deeply embedded formal referencing systems. The challenge is finding flexibility as well as formality, and making it easier to wire up the connections to build the full map.