A note on changes: I’m going to vary my usual practice in this series and post things in a rawer form with the intention of incorporating feedback and comments over time. In the longer term I will aim to post the series in a “completed” form in one way or another as a resource. If there is interest then it might be possible to turn it into a book.

There is no statement more calculated to make a publisher’s blood boil than “Publishers? They just organise peer review” or perhaps “…there’s nothing publishers do that couldn’t be done cheaper and easier by academics”. By the same token there is little that annoys publishing reform activists, or even most academics, more than seeing a huge list of the supposed “services” offered by publishers, most of which seem unfamiliar at best and totally unnecessary, or even counterproductive, at worst.

Much of the disagreement over what scholarly publishing should cost therefore turns on a lack of understanding on both sides. Authors are unaware of much of what publishing actually involves in practice, and in particular how the need for safeguards is changing. Publishers, steeped in the world of how things have been done, tend to be unaware of just how ridiculous the process looks from the outside. In defending the whole process they are slow to open up a conversation with authors, or in some cases actively antagonistic to it: a conversation about which steps are really necessary or wanted, and whether anything can easily be taken away (or equally added to the mix).

And both sides fail to really understand the risks and costs that the other sees in change.

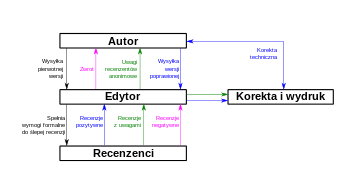

So what is it that publishers in fact do? And why? Publishers manage some sort of system for submitting manuscripts, manage the peer review process, and then convert the manuscript into “the published version”. This last part of the process, which involves the generation of versions in specific formats, getting DOIs registered and submitting metadata, as well as providing the web platform for actually hosting articles, is a good place to start.

Production and Publication

This process has plenty of bits that look as though they should be cheap. Getting identifiers (around $1 an article) and hosting (surely next to nothing?) are the obvious examples, and at one level they are cheap. The real cost in minting a DOI, however, is not the charge from Crossref but the cost of managing the process. Some publishers are very good at managing this (Elsevier have an excellent and efficient data pipeline for instance) while small publishers tend to struggle because they manage it manually. Hosting also has complications; the community expects high availability and rapid downloads, and this is not something that can be done on the cheap. High quality archiving to preserve access to content in the long term is also an issue.

Nonetheless this part of the process need not be the most expensive. Good systems at scale can make these final parts of the publishing process pretty cheap. The more expensive part is format conversion and preparation for publication. It is easy to say (and many people do) that surely this should be automated and highly efficient. It is easy to say because it’s true. It should. But “should” is a slippery word.

If documents came into this process in a standardised form it would be easy. But they don’t. And they don’t on a large scale. One of the key elements we will see over and over again in this series is that scholarly publishing often doesn’t achieve economies of scale because its inputs are messy and heterogeneous. Depending on the level of finish that a publisher wants to provide, the level of cleanup required can differ drastically. Often any specific problem is a matter of a few minutes’ work, but every fix is subtly different.

This is the reason for the sometimes seemingly arbitrary restrictions publishers place on image formats or resolutions, reference formats or page layouts. It is not that differences cannot be accommodated; it is that to do so involves intervention in a pipeline that was never designed, but has evolved over time. Changing some aspect is less a case of replacing one part with a more efficient newer version, and more a case of asking for a chicken to be provided with the wings of a bat.

Would it not be better to re-design the system? To make it both properly designed and modular? Of course the answer is yes, but it’s not trivial, particularly at scale. Bear in mind that for a large publisher stopping the pipeline may mean they would never catch up again. More than this, such an architectural redesign requires not just changes at the end of the process but at the beginning. As we will see later in the series, new players and smaller players can have substantial advantages here, if they have the resources to design and build systems from scratch.

It’s not so bad. Things are improving, pipelines are becoming more automated and less and less of the processing of manuscripts to published articles is manual. But this isn’t yet accruing large savings because the long tail of messy problems was always where the main costs were. Much of this could be avoided if authors were happy with less finish on the final product, but where publishers have publicly tried this, for instance by reducing copy editing, not providing author proofs, or simply not investing in web properties, there is usually a backlash. There’s a conversation to be had here about what is really needed and really wanted, and how much could be saved.

Managing Peer Review

The part of the process where many people agree there is value is the management of peer review. In many cases the majority of the labour contributed here is donated by researchers, particularly where the editors are volunteer academics. However, there is a cost to managing the process, even if, as is the case for some services, that is just managing a web property.

One of the big challenges in discussing the costs and value added in managing peer review is that researchers who engage in this conversation tend to be amongst the best editors and referees. Professional publishers, on the other hand, tend to focus on the (relatively small number of) contributors who are, not to put too fine a point on it, awful. Good academic editors tend to select good referees who do good work, and when they encounter a bad referee they discount it and move on. Professional staff spend the majority of their time dealing with editors who have gone AWOL, referees who are late in responding, or who turn out to be inappropriate either in what they have written or their conflicts of interest, or increasingly who don’t even exist!

An example may be instructive. Some months ago a scandal blew up around the reviews of an article where the reviewer suggested that the paper would be improved if it had some male co-authors on it. Such a statement is inappropriate and there was justifiably an outcry. The question is who is responsible for stopping this from happening. Should referees be more thoughtful? Well yes, but training costs money as well and everyone makes mistakes. Should the academic editor have caught it? Again that’s a reasonable conclusion, but what is most interesting is that there was a strong view from the community that the publishing staff should have caught it. “That referee’s report should never have been sent back to the author…” was a common comment.

Think about that. The journal in question was PLOS ONE, publishing tens of thousands of papers a year, handling some amount more than that, with a few referees’ reports each. Let’s say 100,000 reports a year. If someone needs to check and read every referee’s report and each one took 20 minutes on average (remember the long tail is the problem) then that’s more than 33,000 hours a year, or well over a dozen people working full time just reading the reports (before counting in the effort of getting problems fixed). You could train the academic editors better but with thousands of editors the training would also take about the same number of people to run it. And this is just checking one part of one piece of the process. We haven’t begun to deal with identifying unreported conflicts of interest, managing other ethical issues, resolving disagreements, and so on.
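A rough sketch of the arithmetic behind a headcount estimate like this, in Python. The 100,000 reports and the 20 minutes per report come from the paragraph above; the 1,800 working hours per person per year is an assumed figure, and the function name is purely illustrative. The second case in the loop is only there to show how sensitive the answer is to the minutes-per-report assumption.

```python
def reviewers_needed(reports_per_year, minutes_per_report, hours_per_fte_year=1800):
    """Estimate the full-time staff needed just to read every referee report.

    hours_per_fte_year is an assumption (roughly 47 weeks at 38 hours),
    not a figure taken from any publisher.
    """
    total_hours = reports_per_year * minutes_per_report / 60
    return total_hours / hours_per_fte_year


# 20 minutes is the average suggested in the text; 5 minutes is a
# deliberately optimistic alternative for comparison.
for minutes in (20, 5):
    fte = reviewers_needed(100_000, minutes)
    print(f"{minutes} min per report: about {fte:.1f} full-time staff")
```

Whichever reading time is assumed, the answer is a team rather than a spare afternoon, and that is before any time is spent fixing the problems that get found.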

Much of the irritation you see from publishers when talking about why managing peer review is more than “sending a few emails” relates to this gap in perception. The irony is that the problems are largely invisible to the broader community because publishers keep them under wraps, hidden away so that they don’t bother the community. Even in those cases where peer review histories are made public, this behind-the-scenes work is never released. Academic Editors see a little more of it but still really only the surface, at least on larger journals and with larger publishers.

And here the irony of scale appears again. On the very smallest journals, where academics really are doing the chasing and running, there are also far fewer problems. There are fewer problems precisely because these small and close-knit communities know each other personally. In those niches the ethical and technical issues that are most likely to arise are also well understood by referees and editors. As a journal scales up this personal allegiance drops away, the problems increase, and it becomes less likely that any given academic editor will be able to rely on their own knowledge of all the possible risks. We will return again and again in this series to this diseconomy of scale, and to how it is or is not balanced by the economies of scale that can be achieved.

A key question here is who is bearing the risk of something going wrong. In some cases editors are closely associated with a journal and bear some or much of that risk personally. But this is rare. And as we’ll see, with that personal interaction any slip ups are much more likely to be forgiven. Generally the risk lies primarily with the brand of the journal, and that appears as a financial risk to the publisher. Damage to the brand leads to weaker submissions and that is a substantial danger to viability. That risk is mitigated by including more checks throughout, those checks require staff, and those staff cost money. When publishers talk about “supporting the community’s review process” what they are often thinking is “preventing academics from tripping up and making a mess”.

Submission systems and validation

Probably the least visible part of what publishers do is the set of processes that occur before formal peer review even starts. The systems through which authors submit articles are visible and usually a major target for complaints. These are large complex systems that have built up over time to manage very heterogeneous processes, particularly at large publishers. They are mostly pretty awful, hated in equal measure by both authors and publisher staff.

The few examples of systems that users actually like are purpose built by small publishers. Many people have said it should be easy to build a submission system. And it’s true. It is easy to build a system to manage one specific workflow and one specific journal, particularly a small one. But building something that is flexible(ish) and works reliably at a scale of tens or hundreds of thousands of articles is quite a different issue. Small start ups like PeerJ and Pensoft have the ability to innovate and build systems from scratch that authors enjoy using. Elsevier by contrast has spent years, and reportedly tens of millions of dollars, trying to build a new system. PLOS has invested heavily in taking a new approach to the problem. Both are still in practice using different variants of the Editorial Manager system developed by Aries (Elsevier run their own version, PLOS pays for the service).

These systems are the biggest blocker to innovation in the industry. To solve the problems in production requires new ways of thinking about documents at submission, which in turn requires totally new systems for validation and management. The reasons why this acts as a technical block will be discussed in the next post.

What I want to focus on here is the generally hidden process that occurs between article submission and the start of the formal peer review process. Again, this is much more than “just sending an email” to an editor. There are often layers upon layers of technical and ethical checks. Are specific reporting requirements triggered? Has a clinical trial been registered? Does the article mention reagents or cell lines that have ethical implications? Is it research at all? Do the authors exist? Different journals apply different levels of scrutiny here. Some, like Acta Crystallographica E, do a full set of technical checks on the data supporting the articles; others seem not to run any checks at all. But it can be very hard to tell what level any given journal works to.

It is very rare for this process to surface, but one public example is furnished by John Bohannon’s “Open Access Sting” article. As part of this exercise he submitted an “obviously incorrect” article to a range of journals, including PLOS ONE. The article included mention of human cell lines, and at PLOS this triggered an examination of whether appropriate ethical approval had been gained for those lines. Usually this would remain entirely hidden, but because Bohannon published the emails (something a publisher would never do as it would be breaking confidence with the author) we can see the back and forth that occurred.

Much of what the emails contain is automatic. The part that isn’t, the to-and-fro over ethics approval of the cell lines, is probably unfamiliar to many of those who don’t think publishers do much, but it is also surprisingly common. Image manipulation, dodgy statistical methods, and sometimes deeper problems are often not obvious to referees or editors. And when something goes wrong it is generally the publisher, or the brand, that gets the blame.

Managing a long tail of issues

The theme that brings many of these elements together is the idea that there is a long tail of complex issues, many of which only become obvious when looking across tens of thousands of articles. Any one of these issues can damage a journal badly, and consistent issues will close it down. Many of these could be caught by better standardised reporting, but researchers resist the imposition of reporting requirements like ARRIVE and CONSORT as an unnecessary burden. Many might be caught by improved technical validation systems, if researchers supplied data and metadata in standardised forms. If every author submitted all their images and text in the same version of the same software file format using the same template (that they hadn’t played with to try and get a bit of extra space in the margins) then much could be done. Even in non-standardised forms progress could be made but it would require large scale re-engineering of submission platforms, a challenge to be discussed in the next section.

Or these checks and balances could be abandoned as unnecessary. Or the responsibility placed entirely on academic editors and referees. This might well work at a small scale for niche journals but it simply doesn’t work as things scale up, as noted above, something that will be a recurring theme.

There are many other things I have missed or forgotten: marketing, dissemination, payments handling, as well as the general overheads of managing an organisation. But most of these are just that, the regular overheads of running organisations as they scale up. The argument for these depends on the argument for needing anything more than a basic operation in the first place. The point that most people miss from the outside is this consistent issue of managing the long tail: keeping the servers up 99.99% of the time, dealing with those images or textual issues that don’t fit the standard pipeline, catching problems with the reviewing process before things get out of hand, and checking that the basics are right, that ethics, reporting requirements and data availability have been done properly across a vast range of submissions.

However, the converse is also true: for those communities that don’t need this level of support, costs could be much lower. There are communities where the authors know each other, know the issues intimately and where there isn’t the need or desire for the same level of finish. Publishers would do well to look closer at these and offer them back the cost savings that can be realised. At the very least having a conversation about what these services actually are and explaining why they get done would be a good start.

But on the other side authors and communities need to engage in these discussions as communities. Communities as a whole need to decide what level of service they want, and to take responsibility for the level of checking and validation and consistency they want. There is nothing wrong with slapping the results of “print to PDF” up on the web after a review process managed by email. But it comes with both a lot of work and a fair amount of risk. And while a few loud voices are often happy to go back to basics, often the rest of the community is less keen to walk away from what they see as the professional look that they are used to.

That is not to say that much of this couldn’t be handled better with better technology; there has been a lack of investment and attention in the right parts of the article life cycle. Not surprisingly publishers tend to focus technology development on the parts of the process visible to either authors or readers and neglect these less visible elements. And as we will see in future parts of the series, solving those problems requires interventions right the way through the system, something that is a serious challenge.

Conclusion

It’s easy to say that much of what publishers do is hidden and that researchers are unaware of a lot of it. It is only when things go wrong, when scandals break, that the curtain gets pulled back. But unless we open up an honest conversation about what is wanted, what is needed, and what is currently being missed we’re also unlikely to solve the increasingly visible problems of fraud, peer review cartels and ethical breaches. In many ways, problems that were not visible because they were at a manageable scale are growing to the point of being unmanageable. And we can tackle them collectively or continue to shout at each other.

Part of the difficulty, though, is the lack of transparency about how much things cost. I’ve never been a publisher, but I have organised conferences, built proceedings, got them hosted and maintained them on the web for a long period. And it is a pain, and it’s clearly going to be expensive at scale.

I think that part of the problem, though, is not that scientists do not understand what publishers do; it’s that there is very little clarity about where these costs actually lie. Publishers have a clear incentive to over-estimate and over-claim the work that they do, of course, but that is not what I wonder about. I wonder how much we pay for the various parts of the process. I’ve used the metaphor of the coffee shop before: how much does the coffee actually cost, and how much are you paying for the logos, the ambience, the sofas and whatever else goes with the experience?

The lack of standardisation is a case in point. As you say, the inability of scientists to submit things in a standard form costs publishers a fortune. But, then, the inability of publishers to accept a standardised submission format costs the scientists a fortune as well. I think this is one of the reasons that arXiv is so cheap to run: they have very minimal submission requirements. Cheap for arXiv, and easy for the scientists to conform with, at the cost that different papers in arXiv look different. As you say, many scientists shy away from the DIY nature (and often appearance of the papers) in arXiv. Perhaps they would not do so, if it was clear that the costs of this were in the thousands. And, also, the cost to their own time of following the various and incomprehensible submission rules, all different, from each of the journals.

And part of the problem is agreeing on the correct tools for the job. Where I work we can’t even agree at a single institute, let alone across our scientific community. And, as Ian Mulvany points out [here: http://elifesciences.org/eLife-news/Introducing-Lens-Writer] the toolchain that the publisher is using is probably quite different to that used by the authors anyway. I’m certainly interested in the pipeline eLife is trying to build; they have, as Cameron points out, the advantage of being able to build it from scratch. And I’d certainly be very happy if my lab could agree on a set of tools that lets us manage our own part of the publications workflow. Recent years have seen a lot of encouraging work in this field but I’m not holding my breath.

Thanks for writing this! My own research is closely related so I’m looking forward to seeing how the rest of the series takes shape.

Yep, all good questions. I will get on to costs in a later part, and I agree transparency is a real problem here. But even without that we can start to dig into what bits we do care about. Do you want the ambience? Are you happy if there is no safety regulation in the supply chain? And whose fault is it if I get sick after having a coffee? #stretchedanalogies

Hoping that we could all agree to use a single tool-chain is, of course, a foolish hope and one that is not going to happen. But, perhaps, this is not so much of an issue. Agreeing a single tool at any one point in the system would be enough.

At the moment, ironically, this is Word (PDFs generated from LaTeX often get back-translated into Word). As strange as this is, it wouldn’t be an enormous problem if the journals all used the same typographical features inside the Word doc. But they don’t.

For me, though, this is an old argument. What I am actually interested in is what are the costs associated with the lack of a single tool-chain, or an agreement at any point. This is what I do not know, nor do I know how to estimate.

Do I want ambience, do I want safety regulation, do I just want coffee? The answer to this is more complex, and it depends which part of the process we are talking about. If we are talking about scientific communication, well, I have everything I want and need on my blog: I have IDs, archiving, stability, metadata and so on. And one key thing that I find hard to get from a publisher. I have control: I can publish well thought-out “final” material, or preliminary “working” material; I can publish didactic or research content as I choose; I can program around any bits of the process that irritate me; I can publish very rapidly. Well, I could go on.

But scientific publishing is also about gaining scientific brownie points: here I care about different things. I do care about Impact Factors, about nice looking webpages, about PDFs and strong brand names. Or, rather, I worry that other people care about these things.

I don’t think the “researcher” and “publisher” in your article covers enough ground. “Author”, “Publisher” and “Assessor” would be better. And “Reader” might be worth worrying about. Probably they care about different things.

“I do care about Impact Factors,”

really?? Or do you really just care that they are used to decide careers? (perhaps including the one that matters most for yourself)

For those playing along at home: remember the famous quote from http://occamstypewriter.org/scurry/2012/08/13/sick-of-impact-factors/: “So consider all that we know of impact factors and think on this: if you use impact factors …”

I’d love to see a journal that accepted vanilla RTF files, and then saved a) the authors’ time in formatting in the first place and b) the typesetting peoples’ time in removing the byzantine author-created formatting.

Oh definitely, I’ll get to that difference in roles (even in the same person) later on. It’s a big issue. And the question of prestige, reach or whatever is definitely something I’ll come back to.

Actually lots do, and essentially no journal from a “big publisher” (i.e. any one that doesn’t just slap up a PDF the authors create) does anything except throw out all the formatting that authors do anyway. This is a great segue into the next post which explains why this doesn’t solve the problem…or can’t yet. Now I just need to finish the post!

Those costs (of not having a single tool chain) are hard to estimate because they include one-off development costs (though people are working on a lot of that at the moment), the costs of running that system, which will be different to current costs in some places (including some new QA steps), and also the savings. The savings are probably the easiest to pin down: production is ~$200 per article, format mangling probably averages out (pace my comments about this being a long tail problem) at ~$150 over all articles, and then data migrations across systems are probably ~$100.

So then why do journals demand that articles are formatted just-so? If there’s one thing I can applaud Elsevier for, it’s letting people submit articles for review in arbitrary format.

Habit? Honestly I don’t know. There are some elements of standardisation that help. A quick check on length, number of figures, ease of finding specific things?

But yes there’s almost no reason for this and there would be much better ways to handle most of what this is supposed to achieve. I’ll be talking a bit about why it’s harder than you might think to just implement new systems to do that in the next post.

Thanks, this is an excellent series. I do think that it can’t be beyond the wit of mankind to improve journal production processes without taking the journal offline. How do proper software companies do it? My guess is that Elsevier et al are happy to coast along lazily, sucking huge profits out, and it is only a lack of motivation. Why couldn’t they design something better with a fraction of the profit they take in each year?