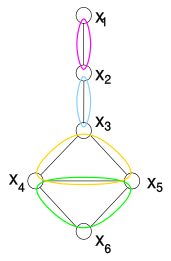

- Image via Wikipedia

“We demonstrate the separation of the complexity class NP from its subclass P. Throughout our proof, we observe that the ability to compute a property on structures in polynomial time is intimately related to the statistical notions of conditional independence and sufficient statistics. The presence of conditional independencies manifests in the form of economical parametrizations of the joint distribution of covariates. In order to apply this analysis to the space of solutions of random constraint satisfaction problems, we utilize and expand upon ideas from several fields spanning logic, statistics, graphical models, random ensembles, and statistical physics.”

Vinay Deolalikar [pdf]

No. I have no idea either, and the rest of the document just gets more confusing for a non-mathematician. Nonetheless the online maths community has lit up with excitement as this document, claiming to settle one of the major outstanding problems in maths, circulated. And in the process we are seeing online, collaborative, post-publication peer review take off.

It has become easy to say that review of research after it has been published doesn’t work. Many examples have failed, or been only partially successful. Most journals with commenting systems still get relatively few comments on the average paper. Open peer review tests have generally been judged a failure. And so we stick with traditional pre-publication peer review despite the lack of any credible evidence that it does anything except cost a few billion pounds a year.

Yesterday, Bill Hooker, not exactly a nay-sayer when it comes to using the social web to make research more effective, wrote:

“…when you get into “likes” etc, to me that’s post-publication review — in other words, a filter. I love the idea, but a glance at PLoS journals (and other experiments) will show that it hasn’t taken off: people just don’t interact with the research literature (yet?) in a way that makes social filtering effective.”

But actually the picture isn’t so negative. We are starting to see examples of post-publication peer review, and to see it radically out-perform traditional pre-publication peer review. The rapid demolition [1, 2, 3] of the JACS hydride oxidation paper last year (not least pointing out that the result wasn’t even novel) demonstrated that the chemical blogosphere was more effective than the peer review of one of the premier chemistry journals. More recently 23andMe issued a detailed, and at least from an outside perspective devastating, peer review (with an attempt at replication!) of a widely reported Science paper describing the identification of genes associated with longevity. This followed detailed critiques from a number of online writers.

These, though, were reviews of published papers. They demonstrate that a post-publication approach can work, but they do not show it working for an “informally published” piece of research such as a blog post or other online posting. In the case of this new mathematical proof, the author, Vinay Deolalikar, apparently took the standard approach in maths and sent a pre-print to a number of experts in the field for comments and criticisms. The paper is not in the ArXiv and was in fact made public by one of the email correspondents. The rumours then spread like wildfire, with widespread media reporting and widespread online commentary.

Some of that commentary was expert and well informed. First, a series of posts appeared stating that the proof is “credible”: that is, that it was worth deeper consideration and the time of experts to look for holes. There appears to be widespread skepticism that the proof will turn out to be correct, including a $200,000 bet from Scott Aaronson, but also a widespread view that it is nonetheless useful and will progress the field in a helpful way even if it is wrong.

After this first round, there have been summaries of the proof, and now potential issues are being identified (see RJLipton for a great summary). As far as I can tell these issues are potentially extremely subtle and will require the attention of the best domain experts to resolve. In a couple of cases these experts have already potentially “patched” the problem, adding their own expertise to contribute to the proof. And in the last couple of hours, as Michael Nielsen pointed out to me, a more organised collaboration to check through the paper has begun.

This is collaborative and positive peer review, and it is happening at web scale. I suspect that there are relatively few experts in the area who aren’t spending some of their time on this problem this week. In the market for expert attention this proof is buying big, as it should be. An important problem is getting a good going over and being tested, possibly to destruction, in a much more efficient manner than could possibly be done by traditional peer review.

There are a number of objections to seeing this as generalizable to other research problems and fields. Firstly, maths has a strong pre-publication communication and review structure which has been strengthened over the years by the success of the ArXiv. Moreover there is a culture of much higher standards of peer review in maths, review which can take years to complete. Both of these encourage circulation of drafts to a wider community than in most other disciplines, priming the community for distributed review to take place.

The other argument is that only high profile work will get this attention; only high profile work will get reviewed at this level, or possibly at all. Actually I think this is a good thing. Most papers are never cited, so why should they suck up the resource required to review them? Whether a given paper, published or not, is useful to someone, somewhere, is not something that can be determined by one or two reviewers. Whether it is useful to you is something that only you can decide. The only person competent to review which papers you should look at in detail is you. Sorry.

Many of us have argued for some time that post-publication peer review with little or no pre-publication review is the way forward. Many have argued against this on the practical grounds that we simply can’t get it to happen, because there is no motivation for people to review work that has already been published. What I think this proof, and the other stories of online review, tell us is that these forms of review will grow of their own accord, particularly around work that is high profile. My hope is that this will start to create an ecosystem where this type of commenting and review is seen as valuable. That would be a more positive route than the alternative, which seems to be a wholesale breakdown of the current system as the workloads rise too high and the willingness of people to contribute drops.

The argument always brought forward for peer review is that it improves papers. What interests me about the online activity around Deolalikar’s paper is that there is a positive attitude. By finding the problems, the proof can be improved, and new insights found, even if the overall claim is wrong. If we bring a positive attitude to making peer review work more effectively and efficiently then perhaps we can find a good route to improving the system for everyone.
