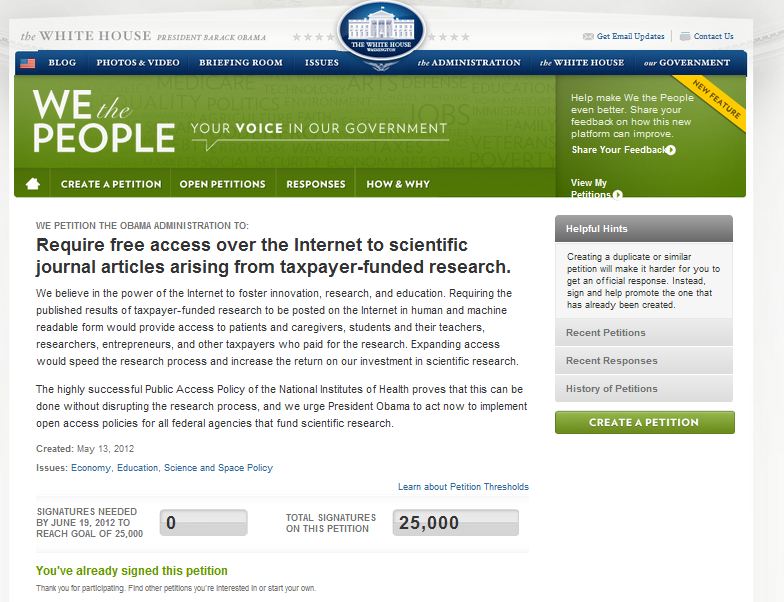

I’m afraid I went to bed. It was getting on for midnight, and it looked like another four hours or so before the petition would reach the magic mark of 25,000 signatures. As it turned out, a final rush put us across the line at around 2am my time. Never mind: I woke up wondering whether we had got there, headed for the computer, and found a pleasant surprise waiting for me.

What does this mean? What have John Wilbanks, Heather Joseph, Mike Carroll, and Mike Rossner achieved by deciding to push through what turned out to be a real slog? And what about all the people and groups who helped bring the signatures in? I think there are three major points here.

Access to Research is now firmly on the White House (and other governments’) agenda

The petition started as a result of a meeting between the Access2Research founders and John Holdren from the White House. John Wilbanks has written about how the meeting went and what the response was. The US administration has sympathy for the cause and understands many of the issues. However, it must be hard to make the case that this is worth the bandwidth it would take to drive a policy initiative, especially in an election year. The petition and the mechanism of the “We the People” site have enabled us to show that this is a policy item that generates public interest, but, more importantly, they create an opportunity for the White House to respond. It is worth noting that this has been one of the more successful petitions. Reaching the 25,000 mark in two weeks is a real achievement, and one that has got the attention of key people.

And that attention spreads globally as well. The Finch Report on mechanisms for improving access to UK research outputs will probably not mention the petition, but you can bet that those within the UK government involved in implementation will have taken note. Similarly, as the details of the Horizon 2020 programme within the EU are hammered out, those deciding on the legal instruments that will distribute around $80B will have noted that there is public demand, and therefore political cover, to take action.

The Open Access Movement has a strong voice, and a diverse network, and can be an effective lobby

It is easy, as we all work towards the shared goal of enabling wider access and the full exploitation of web technologies, to get bogged down in details and to focus on disagreements. What this effort showed was that when we work together we can muster the connections and the network to send a very strong message. And that message is stronger for coming from diverse directions in a completely transparent manner. We have learnt the lessons of the fight against SOPA and PIPA and refined them in the campaign to defeat, in fact to utterly destroy, the Research Works Act. But this was not a reaction, and it was not merely a negative campaign. This was a positive campaign, originating within the movement, which together we have successfully pulled off. There are lessons to be learnt, and things we could have done better. But what we now know is that we have the capacity to take on large-scale public actions and pull them off.

The wider community wants access and has a demonstrated capacity to use it

There has in the past been an argument that public access is not useful because “they can’t possibly understand it”, that “there is no demand for public access”. That argument has been comprehensively and permanently destroyed. It was always an arrogant argument, and in my view a dangerous one for those with a vested interest in ensuring continued public funding of research. Its strong parallels with the arguments deployed in the 18th and 19th centuries, that colonists, or those who did not own land, or women, could not possibly be competent to vote, should have been enough to warn people off using it. The petition has shown demand, and the stories that have surfaced through this campaign show not only that there are many people who are not professional researchers who can use research, but that many of these people also want to contribute back to the professional research effort, and are more than capable of doing so.

The campaign has put the ideas of Open Access in front of more people than perhaps ever before. We have reached out to family, friends, co-workers, patients, technologists, entrepreneurs, medical practitioners, educators, and people just interested in the world around them. Perhaps one in ten of them actually signed the petition, but many of them will have talked to others, spreading the ideas. This is perhaps one of the most important achievements of the petition: getting the message and the idea in front of hundreds of thousands of people who may not take action today, but who will now be primed to see the problems that arise from a lack of access, and the opportunities that access could create.

Where now?

So what are our next steps? Continuing to gather signatures for the next two weeks is still important. This may be one of the most rapidly growing petitions, but demonstrating continued growth is still valuable. More generally, though, my sense is that we need to take stock and look forward to the next phase. The really hard work of implementation is coming. As a movement we still disagree strongly on elements of tactics and strategy. The tactics I am less concerned about: we can take multiple paths, applying pressure at multiple points, and this will be to our advantage. But I think we need a clearer goal on strategy. We need to articulate what the endgame is. What is the vision? When will we know that we have achieved what we set out to do?

Peter Murray-Rust has already quoted Churchill, but the quote does seem apposite: “Now this is not the end. It is not even the beginning of the end. But it is, perhaps, the end of the beginning.”

We now know how much we can achieve when we work together with a shared goal. The challenge now is to harness that to a shared understanding of the direction of travel, if perhaps not the precise route. If we, with all the diversity of needs and views that this movement contains, can find the core of goals that we all agree on, then what we now know is that we have the capacity, the depth, and the strength to achieve them.